10-Week Syllabus

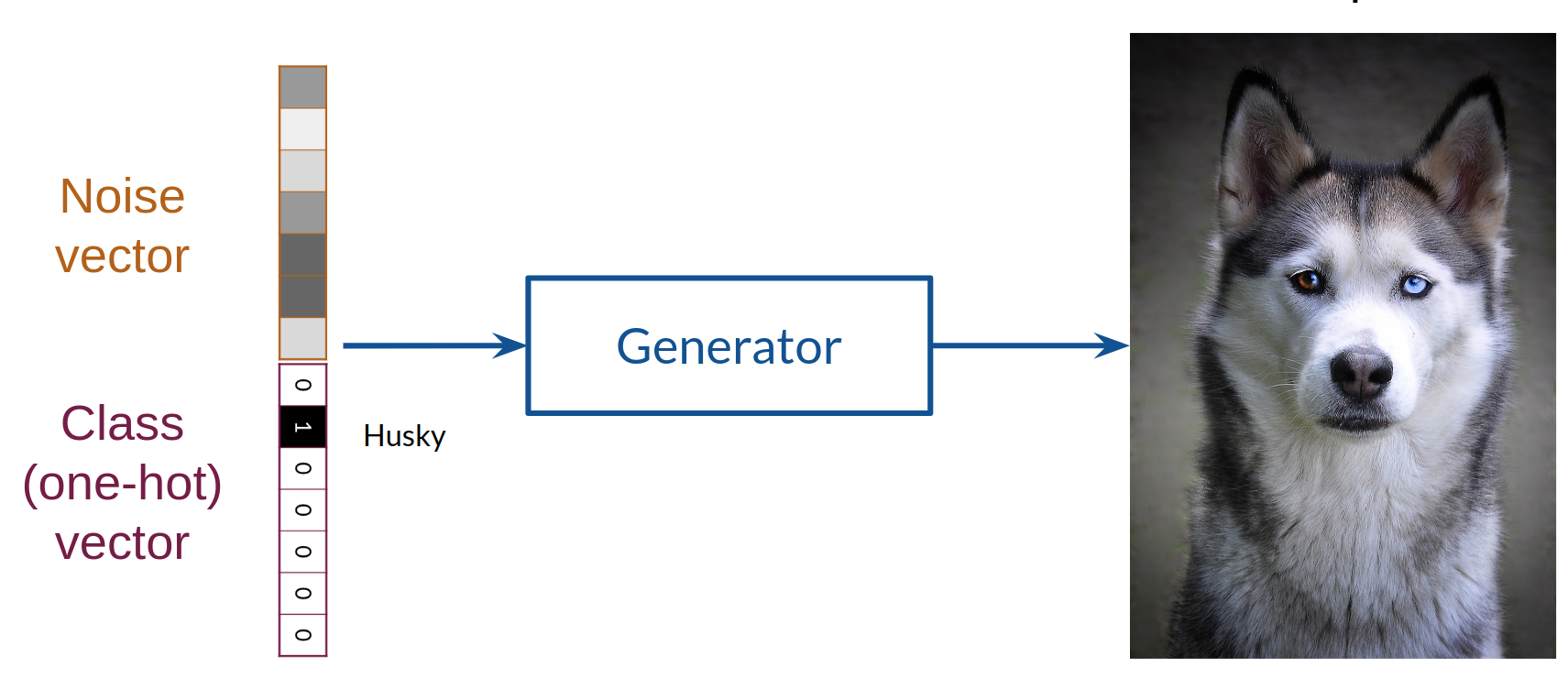

Week 1: How to Make a Dog From Noise

Introduction to Generative Models & Building Your First GAN

- Introduction to Generative vs. Discriminative Models, and Where GANs Fit In

- Intuition Behind GANs

- Role of the Discriminator

- Role of the Generator

- BCE Loss

- Training vs. Inference

- Deep Convolutional GANs

- Review of PyTorch, convolutions, activation functions, batch normalization, padding & striding, pooling & upsampling, transposed convolutions

- Mode Collapse and Problems with BCE Loss

- Earth Mover’s Distance (Wasserstein Distance)

- Wasserstein Loss (W-Loss)

- Condition on the W-Loss Critic

- 1-Lipschitz Continuity Enforcement
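
The BCE and W-loss objectives above can be sketched in a few lines. This is a minimal illustration, assuming plain Python lists of discriminator or critic outputs in place of PyTorch tensors; all function names are hypothetical. BCE expects probabilities in (0, 1), while the W-loss critic outputs unbounded scores, which is why the critic must also be kept 1-Lipschitz (for example by weight clipping or a gradient penalty, neither of which is shown here).

```python
import math
from typing import Sequence


def bce(pred: float, label: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    pred = min(max(pred, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(label * math.log(pred) + (1.0 - label) * math.log(1.0 - pred))


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def discriminator_bce_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Discriminator: push outputs on real images toward 1, on fakes toward 0."""
    real_loss = mean([bce(p, 1.0) for p in d_real])
    fake_loss = mean([bce(p, 0.0) for p in d_fake])
    return (real_loss + fake_loss) / 2


def generator_bce_loss(d_fake: Sequence[float]) -> float:
    """Generator: make the discriminator label its fakes as real (1)."""
    return mean([bce(p, 1.0) for p in d_fake])


def critic_w_loss(c_real: Sequence[float], c_fake: Sequence[float]) -> float:
    """W-loss critic: minimizing mean(c(fake)) - mean(c(real)) maximizes the
    score gap between real and fake. Scores are unbounded, not probabilities."""
    return mean(c_fake) - mean(c_real)


def generator_w_loss(c_fake: Sequence[float]) -> float:
    """Generator: raise the critic's scores on fakes."""
    return -mean(c_fake)


print(discriminator_bce_loss([0.9, 0.8], [0.1, 0.3]))
print(critic_w_loss([2.0, 3.0], [0.5, -0.5]))  # -2.5
```

A confident, correct discriminator gets a small BCE loss, while the critic's loss goes negative as the gap between its real and fake scores widens.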

Week 2: Picking a Breed of Dog to Generate

Controllable Generation and Conditional GAN [Problem set 1 due]

- Conditional Generation: Intuition & Inputs

- Controllable Generation and How It Relates to Conditional Generation

- Vector Algebra in Latent Space

- Challenges with Controllable Generation

- Using Classifier Gradients for Controllable Generation

- Supervised Disentanglement

- Evaluation: Inception Score, Fréchet Inception Distance, HYPE, Classifier-Based Evaluation of Disentanglement

- Challenges in Evaluating Generative Models (Particularly GANs), Importance of Evaluation

- Fidelity vs. Diversity Tradeoffs, Truncation Trick Sampling

- Inception Embeddings vs. Pixel Comparisons

- Inception Score: Intuition, Shortcomings

- Fréchet Inception Distance: Intuition, Shortcomings

- Gold Standard in Fidelity (Human-Centered Approach)

- Intuition of Precision vs. Recall in Generative Models

- Evaluating Disentanglement using the Classifier Method, Perceptual Path Length
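
Two of the Week 2 ideas reduce to short formulas. The sketch below is a hypothetical illustration in plain Python: it shows one common variant of truncation-trick sampling (redrawing noise values that fall outside a threshold) and the Fréchet distance between two 1-D Gaussians fitted to scalar samples. FID applies the multivariate form of this distance to Inception embeddings rather than to raw scalars; the 1-D case is an assumption made here for readability.

```python
import random
import statistics
from typing import List, Sequence


def truncated_noise(dim: int, threshold: float, rng: random.Random) -> List[float]:
    """Truncation-trick sampling (resampling variant): draw each coordinate
    from N(0, 1) and redraw any value whose magnitude exceeds `threshold`.
    Lower thresholds favor fidelity; higher thresholds favor diversity."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


def frechet_distance_1d(real: Sequence[float], fake: Sequence[float]) -> float:
    """Fréchet distance between 1-D Gaussians fitted to two sample sets:
    (mu_r - mu_f)^2 + (sigma_r - sigma_f)^2."""
    mu_r, mu_f = statistics.mean(real), statistics.mean(fake)
    sigma_r, sigma_f = statistics.pstdev(real), statistics.pstdev(fake)
    return (mu_r - mu_f) ** 2 + (sigma_r - sigma_f) ** 2


rng = random.Random(0)
z = truncated_noise(dim=8, threshold=0.5, rng=rng)
print(max(abs(v) for v in z) <= 0.5)  # True
print(frechet_distance_1d([0.0, 2.0], [1.0, 5.0]))  # 5.0
```

Identical sample sets give a distance of 0; both a shifted mean and a mismatched spread increase it, which is the intuition behind FID capturing both fidelity and diversity.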

Week 3: Making High-Quality Faces (and Other Complex Things)

Advancements in GANs and State-of-the-Art Improvements in StyleGAN, Fine-tuning GANs [Problem set 2 due]

- Components of StyleGAN:

  - Disentangled Intermediate Latent W-Space

  - Noise Injection at Multiple Layers (Increased Style Supervision)

  - Uncorrelated Noise for Stochasticity

  - Adaptive Instance Normalization

  - Progressive Growing

- StyleGAN2

- Bias

- Fine-tuning Large GANs, Pros/Cons
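
Adaptive Instance Normalization, one of the StyleGAN components listed above, normalizes each channel of a feature map to zero mean and unit variance and then rescales and shifts it with style-dependent parameters. A minimal sketch in plain Python, assuming the scale and bias are already given (in StyleGAN they come from a learned affine transform of the intermediate latent w) and that a channel is a flat list of activations; the function names are hypothetical:

```python
import math
import statistics
from typing import List, Sequence


def adain(content: Sequence[float], style_scale: float, style_bias: float,
          eps: float = 1e-5) -> List[float]:
    """Adaptive Instance Normalization for one channel of one image:
    style_scale * (x - mean) / std + style_bias."""
    mu = statistics.mean(content)
    var = statistics.pvariance(content, mu)
    std = math.sqrt(var + eps)  # eps avoids division by zero on flat channels
    return [style_scale * (x - mu) / std + style_bias for x in content]


def adain_feature_map(channels: Sequence[Sequence[float]],
                      scales: Sequence[float],
                      biases: Sequence[float]) -> List[List[float]]:
    """Apply AdaIN independently to every channel with its own style params."""
    return [adain(ch, s, b) for ch, s, b in zip(channels, scales, biases)]


print(adain([1.0, 3.0], style_scale=2.0, style_bias=10.0, eps=0.0))  # [8.0, 12.0]
```

Because the statistics are computed per channel and per image, the original activation scale is discarded and the style parameters fully determine each channel's new mean and spread.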

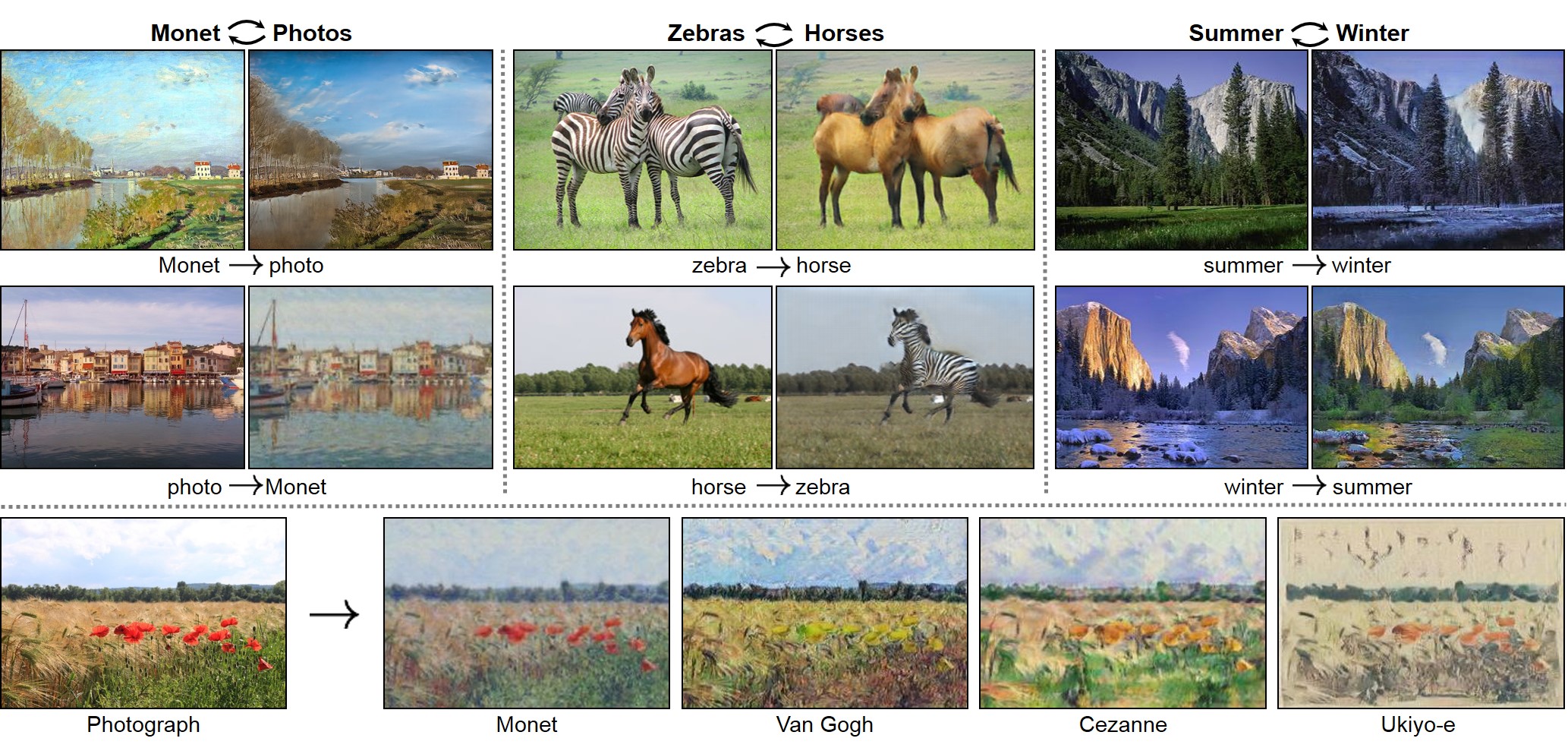

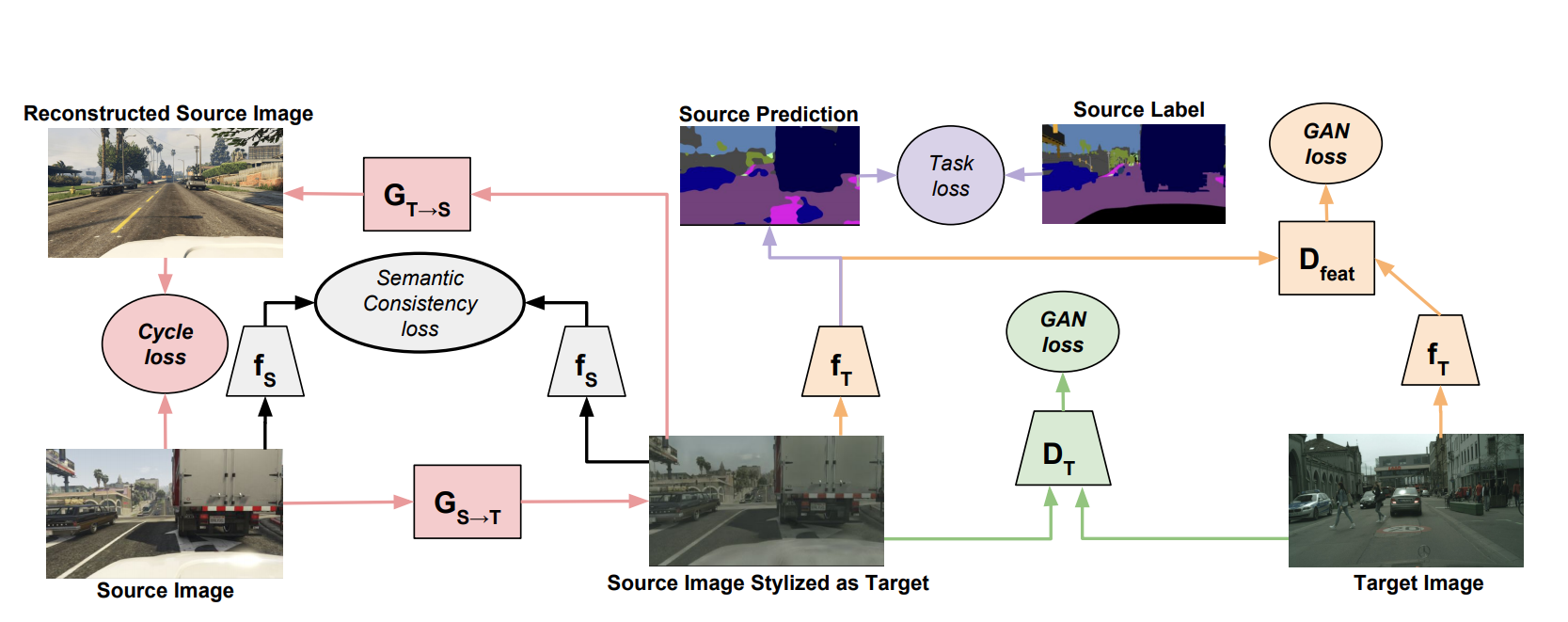

Week 4: Changing Painters, Species, and Seasons

- Pix2Pix for Paired Image-to-Image Translation

  - U-Net, Skip Connections

  - PatchGAN

- CycleGAN for Unpaired Image-to-Image Translation

  - Cycle Consistency

  - Identity Loss

- Multimodal Generation:

  - Shared Latent Space Assumption (UNIT)

  - Extended to Multimodal (MUNIT)

- Beyond Image-to-Image: Other Translation Forms

  - GauGAN: Segmentation Maps to Images

  - Text-to-Image, Image-to-Text

  - Musical-Notes-to-Melody

- Data Augmentation

- Image Editing, In-painting, and GAN Inversion

  - Image Editing, Photoshop 2.0

  - Inverting a GAN, Challenges from Increasing Model Size, BiGAN

  - GAN Inversion vs. Image Optimization

  - Combining Inversion Techniques (“Warm Start”) with Optimization
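
The CycleGAN cycle-consistency and identity losses listed above can be sketched with toy generators. This is a hypothetical illustration in plain Python, assuming images are flat lists of pixel values and using an L1 (mean absolute error) distance; `g_ab` and `g_ba` stand in for the two learned generators, and the toy shift functions are not real models.

```python
from typing import Callable, List, Sequence

# Hypothetical types: an image is a flat list of pixel values and a
# generator maps one image to another.
Image = List[float]
Generator = Callable[[Sequence[float]], Image]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x_a: Image, x_b: Image,
                           g_ab: Generator, g_ba: Generator) -> float:
    """Translating A -> B -> A (and B -> A -> B) should return the input."""
    return (l1_distance(g_ba(g_ab(x_a)), x_a)
            + l1_distance(g_ab(g_ba(x_b)), x_b))


def identity_loss(x_a: Image, x_b: Image,
                  g_ab: Generator, g_ba: Generator) -> float:
    """A generator fed an image already in its target domain should leave it
    (nearly) unchanged."""
    return l1_distance(g_ab(x_b), x_b) + l1_distance(g_ba(x_a), x_a)


# Toy generators that are exact inverses of each other.
def shift_up(img: Sequence[float]) -> Image:
    return [p + 1.0 for p in img]


def shift_down(img: Sequence[float]) -> Image:
    return [p - 1.0 for p in img]


x_a = [0.0, 0.5, 1.0]
x_b = [2.0, 2.5, 3.0]
print(cycle_consistency_loss(x_a, x_b, shift_up, shift_down))  # 0.0
print(identity_loss(x_a, x_b, shift_up, shift_down))  # 2.0
```

Here the toy generators are exact inverses, so the cycle loss is zero, while the identity loss is nonzero because each generator still shifts images that are already in its target domain.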

Week 5: Reading (and Re-reading)

- Approach to Reading Research Papers: Skim Twice, Read Twice

- Model Diagrams and Common Representations

- Adjacent Areas of Research: Adversarial Learning, Robustness and Adversarial Attacks

- Compelling Applied Areas and Research:

  - Healthcare

  - Climate Change

Week 6: Projects

- Meeting with Mentors for feedback and direction

Week 7: Projects

Week 8: Projects

- Meeting with Mentors

Week 9: Projects

Week 10: The Finale

CS236G
